A Style-Based Generator Architecture for Generative Adversarial Networks

WHY?

High-quality, disentangled image generation is a long-standing goal of generative models. This paper proposes a style-based generator architecture for GANs, borrowing techniques from the style transfer literature.

WHAT?

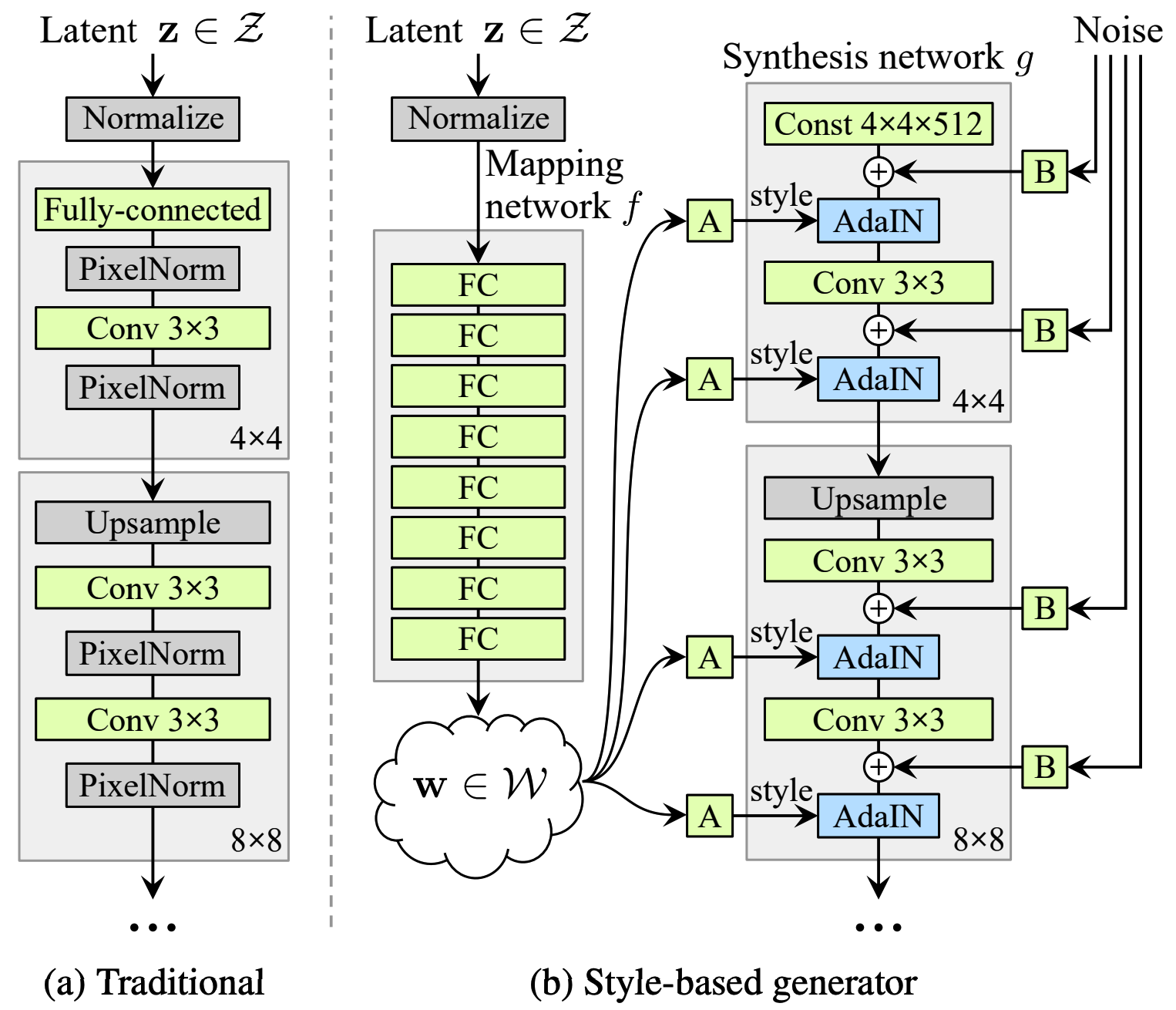

SBGAN (the style-based GAN) changes the generator architecture, building on top of PGGAN (Progressive GAN).

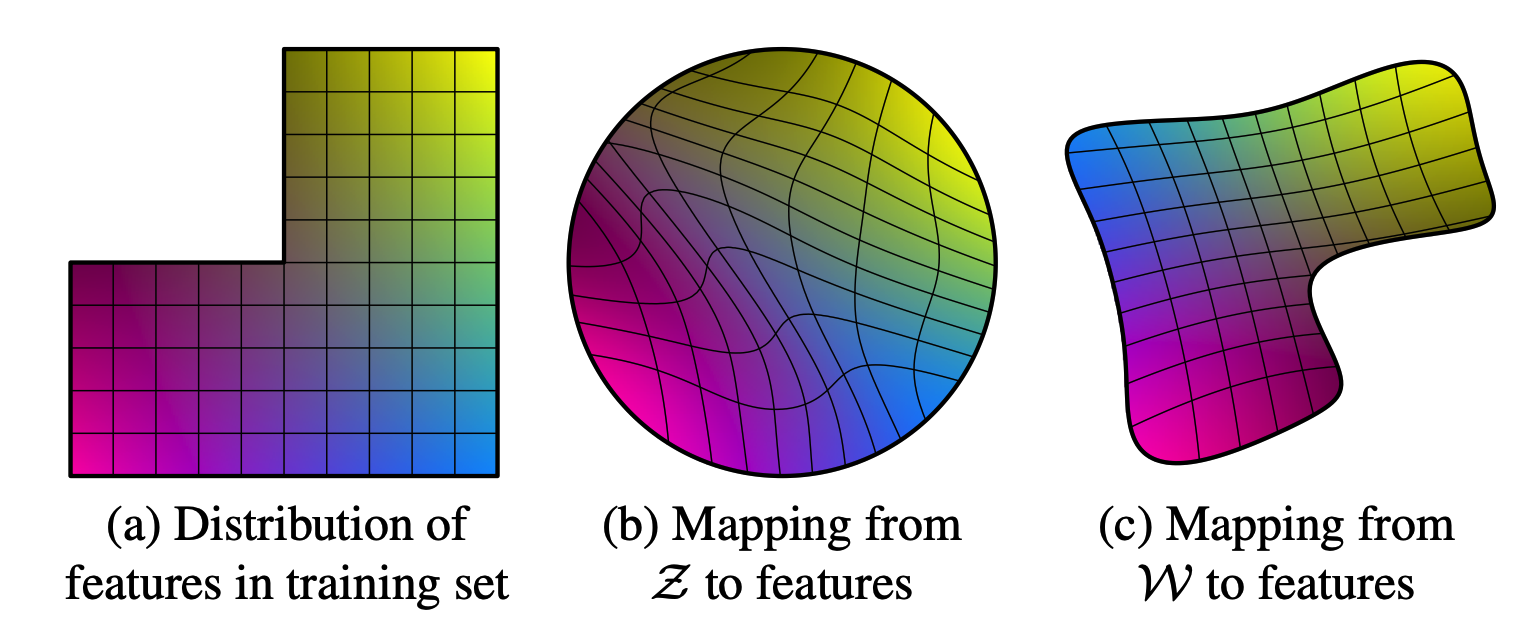

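Instead of feeding a latent code z through the input layer of the generator, SBGAN starts from a learned constant. The latent code is transformed by a mapping network, an 8-layer MLP, into an intermediate latent code w, which is fed to each layer through adaptive instance normalization (AdaIN). The paper argues that, because the intermediate latent space W does not have to follow a fixed sampling distribution, the mapping network can adapt it to the distribution of the dataset, which makes the factors of variation less entangled.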
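
The mapping network and AdaIN step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the weights are random stand-ins for learned parameters, and the sizes (`latent_dim`, `channels`), the leaky-ReLU slope, and the `+ 1.0` offset on the scale are assumptions chosen for the example. AdaIN normalizes each feature map to zero mean and unit variance, then applies a per-channel scale and bias derived from w.

```python
import numpy as np

rng = np.random.default_rng(0)

def mapping_network(z, weights, biases, slope=0.2):
    """Map latent z to intermediate latent w with an MLP (leaky ReLU assumed)."""
    h = z
    for weight, bias in zip(weights, biases):
        h = h @ weight + bias
        h = np.where(h > 0, h, slope * h)
    return h

def adain(x, y_scale, y_bias, eps=1e-8):
    """Normalize each channel of x (C, H, W), then apply per-channel style."""
    mu = x.mean(axis=(1, 2), keepdims=True)
    sigma = x.std(axis=(1, 2), keepdims=True)
    x_norm = (x - mu) / (sigma + eps)
    return y_scale[:, None, None] * x_norm + y_bias[:, None, None]

# Illustrative sizes only (hypothetical, much smaller than a real model).
latent_dim, channels = 16, 4

# Random stand-ins for the 8 learned layers of the mapping network.
weights = [rng.normal(0, 1 / np.sqrt(latent_dim), (latent_dim, latent_dim))
           for _ in range(8)]
biases = [np.zeros(latent_dim) for _ in range(8)]

# Stand-in for the learned affine transform that turns w into a style.
affine = rng.normal(0, 0.1, (latent_dim, 2 * channels))

z = rng.normal(size=latent_dim)
w = mapping_network(z, weights, biases)
style = w @ affine
y_scale, y_bias = style[:channels] + 1.0, style[channels:]

# Stand-in for the learned constant input tensor.
const_input = rng.normal(size=(channels, 4, 4))
out = adain(const_input, y_scale, y_bias)
```

After AdaIN, each channel of `out` has mean `y_bias` and standard deviation `|y_scale|`, so the statistics of every feature map are set by the style alone.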

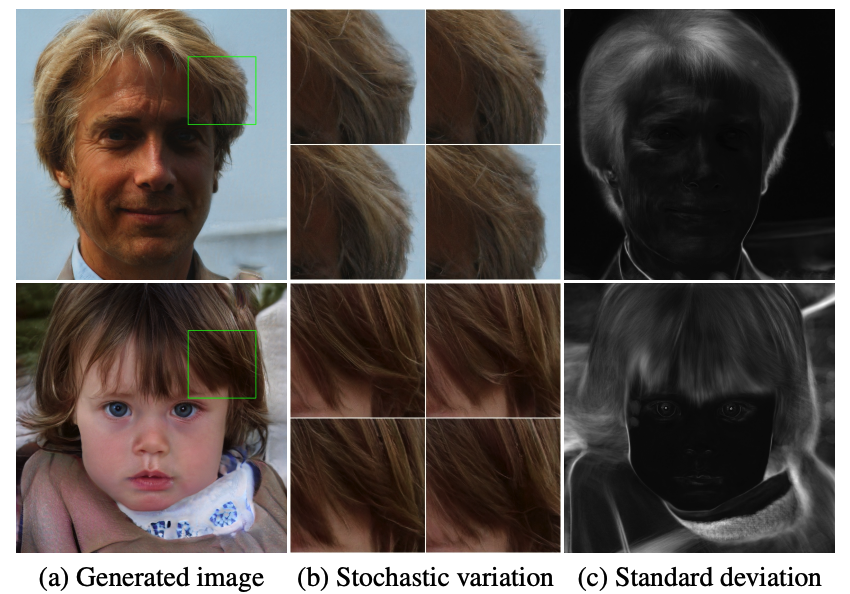

Finally, external noise inputs are added to each layer to provide stochasticity.
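
A rough sketch of the noise injection, assuming a single-channel Gaussian noise image that is broadcast to all feature maps with a per-channel scaling factor. The scaling factors are learned in the real model; here `channel_scale` is a hand-picked, hypothetical value, and the function name `add_noise` is made up for illustration.

```python
import numpy as np

def add_noise(x, channel_scale, rng):
    """Add one noise image to every channel of x (C, H, W), scaled per channel."""
    _, height, width = x.shape
    noise = rng.normal(size=(1, height, width))  # one noise value per pixel
    return x + channel_scale[:, None, None] * noise

features = np.zeros((3, 4, 4))
channel_scale = np.array([0.0, 0.5, 1.0])  # hypothetical learned scales

# Different noise draws give different outputs for the same features.
out_a = add_noise(features, channel_scale, np.random.default_rng(1))
out_b = add_noise(features, channel_scale, np.random.default_rng(2))
```

A scale of zero leaves a channel untouched, so the network can decide per feature map how much stochastic detail to use.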

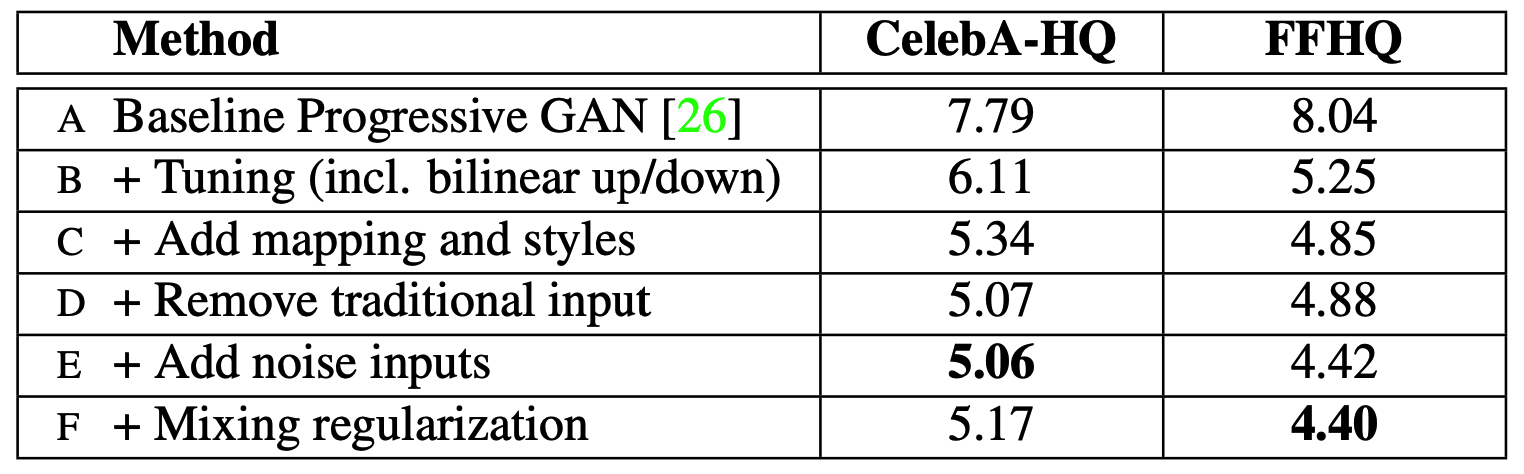

A comprehensive ablation study was conducted. Starting from (A) PGGAN, (B) bilinear up/downsampling operations improved the quality. (C) The mapping network with AdaIN and (D) the constant input tensor further improved the results. Then (E) external noise inputs and (F) mixing regularization were added; mixing regularization generates a portion of the training images from a mix of two random latent codes. The truncation trick in W is used only for demonstration.
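
Mixing regularization can be sketched as choosing a random crossover layer and switching from one intermediate latent code to another at that point. The sketch below is an assumption-laden illustration: `mixed_styles` and `mixing_prob` are hypothetical names, and 18 layers (two per resolution from 4x4 to 1024x1024) is used only as an example.

```python
import numpy as np

def mixed_styles(w1, w2, num_layers, mixing_prob, rng):
    """Return per-layer styles: w1 before a random crossover layer, w2 after."""
    if rng.random() < mixing_prob:
        crossover = int(rng.integers(1, num_layers))  # 1 .. num_layers - 1
    else:
        crossover = num_layers  # no mixing: w1 controls every layer
    styles = np.stack([w1 if i < crossover else w2 for i in range(num_layers)])
    return styles, crossover

rng = np.random.default_rng(0)
w1, w2 = np.zeros(8), np.ones(8)  # stand-ins for two mapped latent codes

styles_none, c_none = mixed_styles(w1, w2, 18, 0.0, rng)
styles_mix, c_mix = mixed_styles(w1, w2, 18, 1.0, rng)
```

Because adjacent layers sometimes receive styles from different latent codes during training, the network cannot assume that neighboring styles are correlated.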

SO?

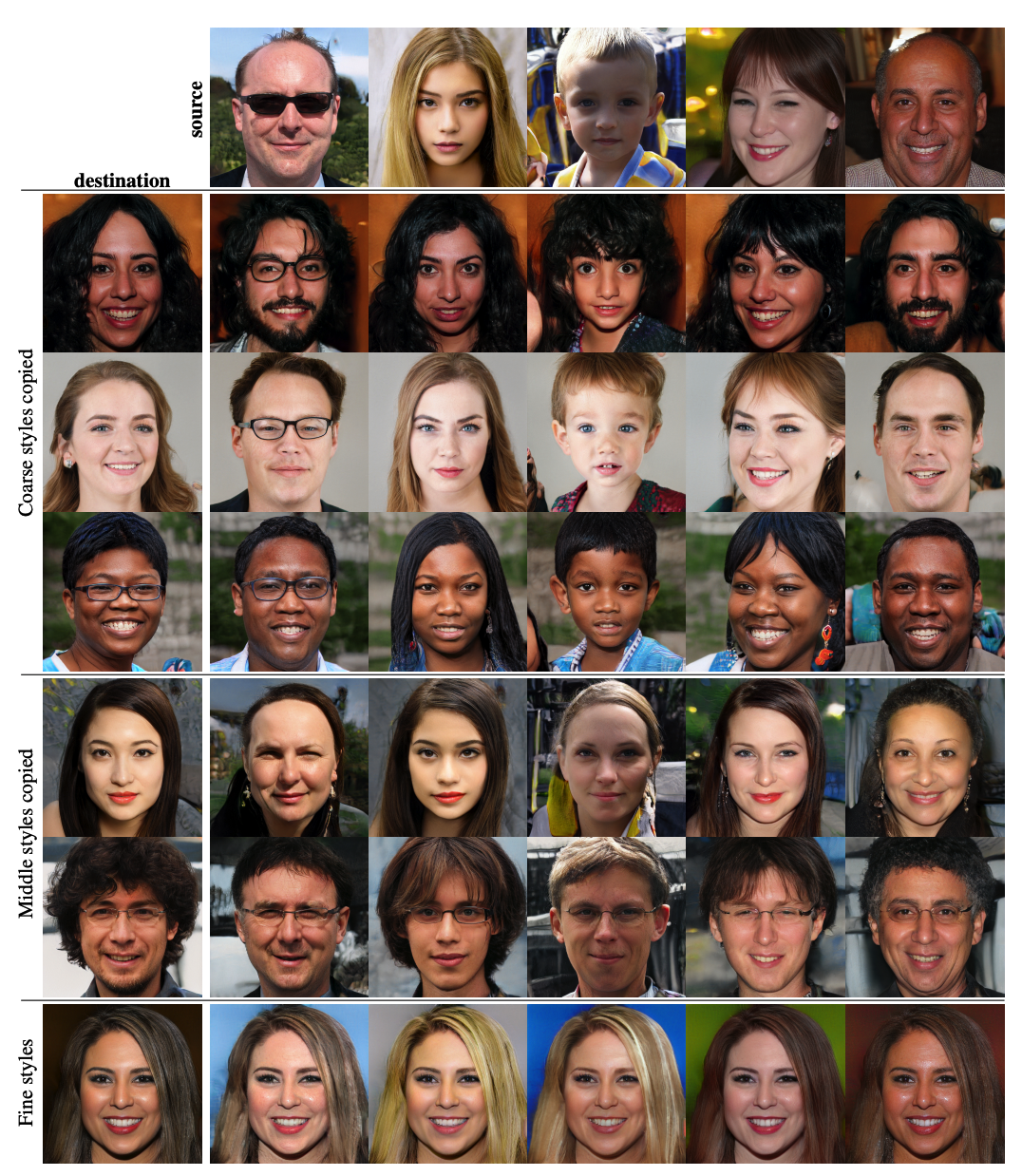

Mixing the latent codes of two images over different ranges of layers mixes styles at the corresponding levels of detail.
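
As a hedged illustration of scale-specific mixing, the snippet below copies the styles of a source B into a chosen range of layers of a source A. The grouping into coarse (4x4 to 8x8), middle (16x16 to 32x32) and fine (64x64 to 1024x1024) resolutions follows the paper's figure; the mapping to layer indices assumes 18 layers with two per resolution, and `SCALE_RANGES` and `mix_at_scale` are hypothetical names.

```python
import numpy as np

# Layer index ranges, assuming 18 synthesis layers (two per resolution).
SCALE_RANGES = {
    "coarse": range(0, 4),   # 4x4 and 8x8
    "middle": range(4, 8),   # 16x16 and 32x32
    "fine": range(8, 18),    # 64x64 up to 1024x1024
}

def mix_at_scale(w_a, w_b, scale, num_layers=18):
    """Use w_a for every layer except those in the chosen scale, which use w_b."""
    styles = np.tile(w_a, (num_layers, 1))
    styles[list(SCALE_RANGES[scale])] = w_b
    return styles

w_a, w_b = np.zeros(8), np.ones(8)  # stand-ins for two mapped latent codes
styles = mix_at_scale(w_a, w_b, "middle")
```

Here only layers 4 to 7 take their style from B, so B would contribute mid-level attributes while A keeps control of the coarse and fine layers.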

Changing the input noise at different layers controls stochastic variation at the corresponding scales.