Learning Visual Question Answering by Bootstrapping Hard Attention

in Studies on Deep Learning, Computer Vision

WHY?

Hard attention has been explored far less than soft attention.

WHAT?

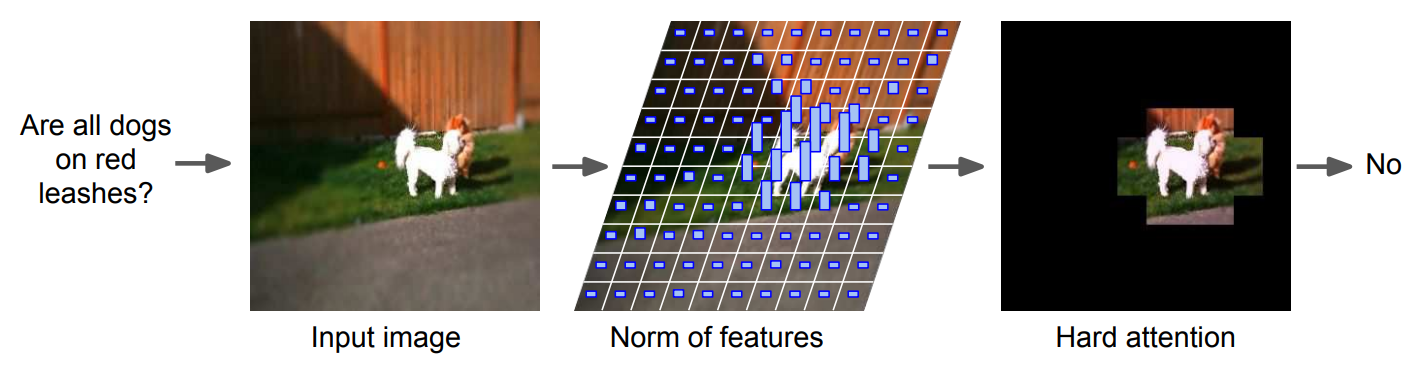

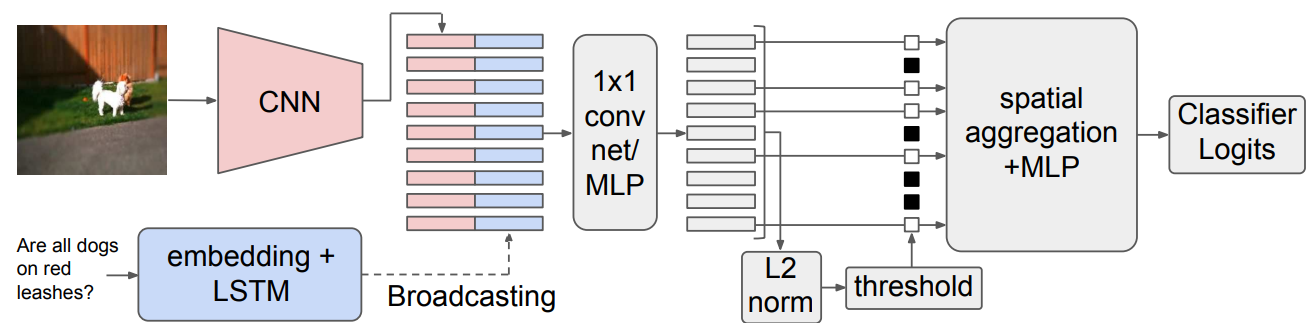

This paper shows that hard attention can be as competitive and efficient as soft attention when the hard attention is bootstrapped. In contrast to soft attention, which weights every location, hard attention discretely chooses which points to attend to.

The key idea is to use the L2 norm of each object's activations as the selection signal. The vanilla Hard Attention Network (HAN) chooses the top-k objects with the largest L2 norm. AdaHAN, on the other hand, uses a threshold \tau to select objects, so the number of attended objects can vary.
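The two selection rules can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the function names (`l2_norms`, `select_top_k`, `select_by_threshold`) and the toy feature vectors are hypothetical, and the threshold is applied directly to raw norms here, which may differ from the normalization used in the paper.

```python
import math

def l2_norms(features):
    """Return the L2 norm of each feature vector."""
    return [math.sqrt(sum(x * x for x in vec)) for vec in features]

def select_top_k(features, k):
    """HAN-style rule: indices of the k vectors with the largest L2 norm."""
    norms = l2_norms(features)
    ranked = sorted(range(len(features)), key=lambda i: norms[i], reverse=True)
    return sorted(ranked[:k])  # restore original order of the kept indices

def select_by_threshold(features, tau):
    """AdaHAN-style rule (assumed form): indices whose L2 norm exceeds tau."""
    norms = l2_norms(features)
    return [i for i, n in enumerate(norms) if n > tau]

# Toy activations for four objects (hypothetical values).
features = [[3.0, 4.0], [0.1, 0.2], [1.0, 0.0], [6.0, 8.0]]
top2 = select_top_k(features, k=2)
above = select_by_threshold(features, tau=0.5)
```

With top-k the number of attended objects is fixed in advance, whereas the threshold rule lets it depend on the input.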

Self-attention can be applied on top of the selected objects to provide an inductive bias for relational reasoning.
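As a rough sketch of what self-attention over the selected objects could look like, the example below uses scaled dot-product attention with no learned projections. That simplification is an assumption made for brevity, not the paper's exact relational module, and `self_attention` and the `selected` vectors are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections
    (queries, keys, and values are all the input vectors themselves)."""
    d = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        # Each output is a weighted average of all selected vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        )
    return outputs

# Feature vectors of the objects kept by hard attention (toy values).
selected = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = self_attention(selected)
```

Because hard attention keeps only a few objects, the pairwise comparison here runs over a much smaller set than the full feature map.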

SO?

HAN achieved results competitive with soft-attention networks.